There is some text in the MSFT document but there’s so little difference here that it’s negligible. It is supported by all versions of Hyper-V, but only in the Enterprise/Plus editions of vSphere 5.1. ODX greatly reduces the time required for file operations on compatible SANs. That has a big impact on storage performance, and therefore the performance of guest services/applications.

And don’t forget that hackers now have a way to use the architecture of VMDK to break out from the guest OS, something that is a genuine threat in hosting or cloud computing (FACT).

Even though Hyper-V has the edge on physical LUNs, I’m going to ignore it because I hate passthrough/RDM disks. VHDX is also the only 4K-aligned virtual hard disk. The Hyper-V VHDX is more suited to bigger applications. Microsoft’s VHDX scales out to 64 TB whereas the VMware VMDK is limited to 2 TB. Using passthrough or RDM disks is an oxymoron in the cloud because it completely removes the element of self-service. In a cloud, you need a scalable (for big data) and flexible storage solution. vSphere requires VAMP, a feature only in Enterprise and Enterprise Plus. You can choose which solution scales out to be the best enterprise or cloud solution.

A massive piece of the investment for Microsoft was storage, trying to scale out, offer new solutions, and to alleviate problems that exist in all sizes of business.

All versions of Hyper-V support guest MPIO by using the SAN manufacturer’s own DSM/MPIO solution, just as they would with a physical server, but by using NPIV. vSphere maxes out at 2 nodes in a guest cluster that uses Fibre Channel storage. Speaking of which, WS2012 Hyper-V supports guest clusters with up to 64 nodes with iSCSI, SMB 3.0, and Fibre Channel storage. You minimize risk by using guest clusters. The fact is that you save money (hardware, licensing, power, space) by scaling up first, and then out. OK, I dare VMware to reduce their max specs down to a max of 20 VMs per host.
That’s quite dense.

Expect some FUD that goes like “having 133 VMs on a host is too risky”. That would mean there would be 133-134 VMs on each host in the cluster. I’d really have all 8,000 VMs balanced across the 64 nodes, but there would be the equivalent of 60 nodes active.

I haven’t seen best practices, but I’d probably want the equivalent of 4 failover hosts in a 64 node cluster.
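The density figure is easy to check with a few lines of arithmetic. This is only a rough sketch: the function name `vms_per_active_host` is made up for illustration, and it assumes every VM is the same size and that the reserve is exactly the 4 hosts' worth of capacity suggested here, which is my own guess and not a published best practice.

```python
import math


def vms_per_active_host(total_vms, cluster_nodes, failover_equivalent):
    """Return the (low, high) VM count per host when the cluster has to
    carry every VM on the capacity of the non-reserved hosts.

    Hypothetical helper for illustration only; assumes identical VMs.
    """
    active_nodes = cluster_nodes - failover_equivalent
    if active_nodes <= 0:
        raise ValueError("the reserve cannot use up the whole cluster")
    per_host = total_vms / active_nodes
    # Some hosts take the rounded-down count, the rest take one more.
    return math.floor(per_host), math.ceil(per_host)


# Figures from the post: 8,000 VMs, 64 nodes, 4 hosts' worth of reserve.
low, high = vms_per_active_host(8000, 64, 4)
print(f"Sized for 60 active nodes: {low}-{high} VMs per host")

# In normal running the VMs are balanced across all 64 nodes.
print(f"Balanced across all 64 nodes: {8000 / 64:.0f} VMs per host")
```

So the 133-134 number is the worst case you size for, with 4 hosts' worth of capacity gone. Day to day, with all 64 nodes up, each host carries about 125 VMs.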

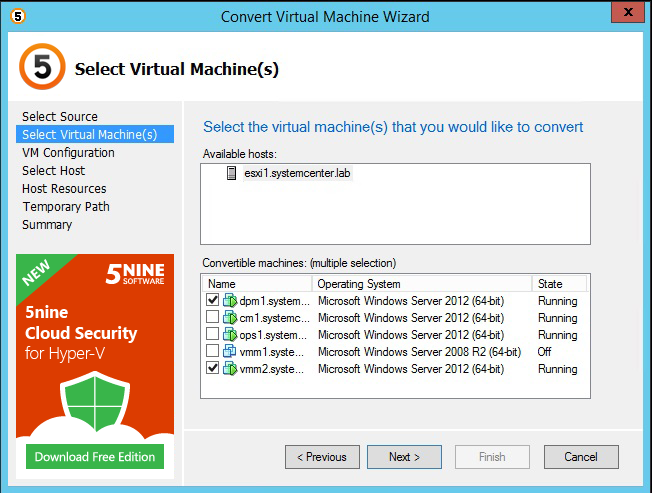


And remember that ESXi free does not include Failover Clustering; you must have vSphere to have failover (application uptime). That makes Hyper-V much more scalable for public/hosting and private clouds. A host can have twice the number of VMs and physical RAM. Hyper-V scales out to over twice the cluster size/capacity of vSphere.

vSphere 5.1 Enterprise supports up to 32 vCPUs in a VM. For example, you can’t just let a VM have lots of vCPUs; you need to make the VM’s guest OS aware of the NUMA of the underlying hardware, which is what Microsoft has done. Microsoft made a huge investment in WS2012 Hyper-V to achieve these scale up/out numbers; it wasn’t just a matter of editing some spreadsheet.


Keep in mind that the Hyper-V features and scalability are identical across all of the 2012 editions:

- Windows Server 2012 Datacenter edition Hyper-V, with its unlimited free VOSEs on the licensed host.
- Windows Server 2012 Standard edition Hyper-V, with its 2 free VOSEs on the licensed hosts (which can be stacked on that host via over-licensing).

Actually, Microsoft has already done quite a bit of that for us with a new comparison document that was released overnight. Instead, let’s just compare numbers and features. Given the facts, you have to question that sort of opinion. I’m not going to foolishly declare vSphere as only being suitable for SMEs. Hyper-V has scaled out again, and VMware has announced vSphere 5.1. Of course, things have changed since then. In a previous post, I documented the comparison of Windows Server 2012 Hyper-V with vSphere 5.0 as it was back at TechEd 2012 in June. Time to kick another wasp nest! I got so many nice comments after the last time.
